MCP Getting-Started Examples


Warning

This article was last updated on 2025-04-10; some of its content may be out of date.

Quick Start

Install the UV Tool


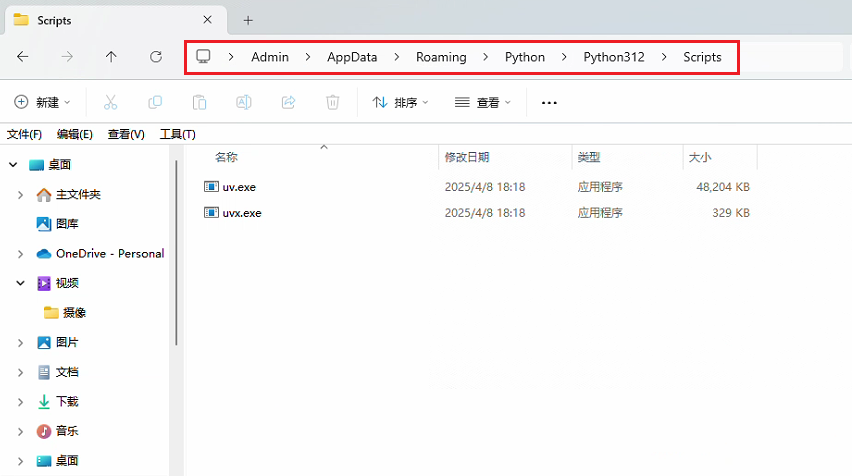

Find the directory that contains uv.exe (here C:\Users\Admin\AppData\Roaming\Python\Python312\Scripts), copy that path, and add it to the system `Path` environment variable.
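To check from Python that the Scripts directory really is on `Path`, you can use the standard library. `shutil.which` is a real stdlib function. `dir_on_path` is a hypothetical helper written only for this illustration: it splits a PATH-style string and compares normalized entries.

```python
import os
import shutil


def dir_on_path(directory: str, path_value: str) -> bool:
    """Return True if `directory` is one of the entries in a PATH-style string.

    Hypothetical helper: entries are split on os.pathsep and compared after
    normalizing separators and case (os.path.normcase lowercases on Windows).
    """
    target = os.path.normcase(os.path.normpath(directory))
    for entry in path_value.split(os.pathsep):
        entry = entry.strip().strip('"')  # Windows PATH entries may be quoted
        if entry and os.path.normcase(os.path.normpath(entry)) == target:
            return True
    return False


if __name__ == "__main__":
    # shutil.which returns the full path to the uv executable, or None if PATH lacks it
    print("uv found at:", shutil.which("uv"))
    scripts = r"C:\Users\Admin\AppData\Roaming\Python\Python312\Scripts"
    print("Scripts dir on PATH:", dir_on_path(scripts, os.environ.get("PATH", "")))
```

A new DOS window is needed after editing `Path`, because already-open windows keep the old environment.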

(Figure 1)

Type `uv` in a DOS (Command Prompt) window to check that the command is found.

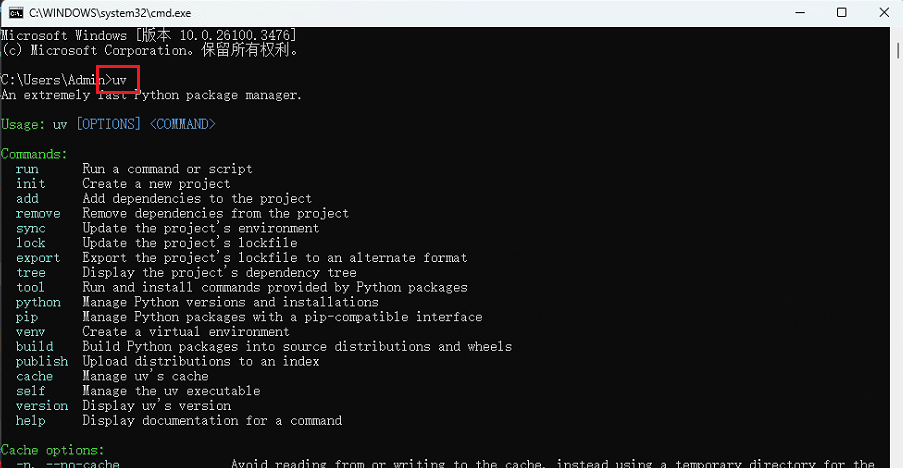

(Figure 2)

MCP Server Getting-Started Example


(Figure 3)

personalInfo.py


personal.csv


MCP Client Getting-Started Example

Our client and server live in the same project.


client.py

```python
import asyncio
import json
import sys


class MCPClient:
    # __init__ (which sets self.session, self.client, self.model and
    # self.exit_stack) and connect_to_server() are not shown here.

    async def process_query(self, query: str) -> str:
        """Process a query using the LLM and the tools exposed by the MCP server."""
        messages = [
            {"role": "system", "content": "You are an intelligent assistant that helps users answer questions."},
            {"role": "user", "content": query},
        ]

        # List the tools the MCP server offers
        response = await self.session.list_tools()
        print("Available tools:", response)

        # Convert MCP tool definitions into the OpenAI function-calling format
        available_tools = [
            {
                "type": "function",
                "function": {
                    "name": tool.name,
                    "description": tool.description,
                    "parameters": tool.inputSchema,  # must be a valid JSON Schema
                },
            }
            for tool in response.tools
        ]

        response = self.client.chat.completions.create(
            model=self.model,
            messages=messages,
            tools=available_tools,
        )

        content = response.choices[0]
        if content.finish_reason == "tool_calls":
            # Store the model's tool-call request in messages
            messages.append(content.message.model_dump())

            # Execute every requested tool; each tool_call_id needs a matching "tool" message
            for tool_call in content.message.tool_calls:
                function_name = tool_call.function.name
                function_args = json.loads(tool_call.function.arguments)
                print(f"\n\n[Calling tool {function_name} with args {function_args}]\n\n")
                result = await self.session.call_tool(function_name, function_args)
                result_content = result.content[0].text
                messages.append({
                    "tool_call_id": tool_call.id,
                    "role": "tool",
                    "name": function_name,
                    "content": result_content,
                })

            # Send the tool results back to the model to generate the final answer
            print("messages===>", messages)
            response = self.client.chat.completions.create(
                model=self.model,
                messages=messages,
                tools=available_tools,
            )
            return response.choices[0].message.content.strip()

        return content.message.content.strip()

    async def chat_loop(self):
        """Run an interactive chat loop."""
        print("\nMCP Client Started!")
        print("Type your queries or 'quit' to exit.")
        while True:
            try:
                query = input("\nQuery: ").strip()
                if query.lower() == "quit":
                    break
                response = await self.process_query(query)
                print("\n" + response)
            except Exception as e:
                print(f"\nError: {e}")

    async def cleanup(self):
        """Clean up resources."""
        await self.exit_stack.aclose()


async def main():
    if len(sys.argv) < 2:
        print("Usage: python client.py <path_to_server_script>")
        sys.exit(1)

    client = MCPClient()
    try:
        await client.connect_to_server(sys.argv[1])
        await client.chat_loop()
    finally:
        await client.cleanup()


if __name__ == "__main__":
    asyncio.run(main())
```
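The list comprehension that builds `available_tools` connects MCP to the OpenAI-style function-calling API. Below is a self-contained sketch of that conversion. It uses a hypothetical `FakeTool` dataclass in place of the MCP SDK's tool objects, which carry the same `name`, `description` and `inputSchema` attributes used in the client. The tool name in the usage part is also hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class FakeTool:
    """Hypothetical stand-in for one entry of session.list_tools().tools."""
    name: str
    description: str
    inputSchema: dict[str, Any] = field(default_factory=dict)


def to_openai_tools(tools: list[FakeTool]) -> list[dict[str, Any]]:
    """Wrap each MCP tool in the {"type": "function", "function": {...}} shape."""
    return [
        {
            "type": "function",
            "function": {
                "name": tool.name,
                "description": tool.description,
                # The MCP input schema is already JSON Schema, so it passes through unchanged
                "parameters": tool.inputSchema,
            },
        }
        for tool in tools
    ]


if __name__ == "__main__":
    schema = {"type": "object", "properties": {"name": {"type": "string"}}, "required": ["name"]}
    tools = [FakeTool("get_personal_info", "Look up a person by name", schema)]
    print(to_openai_tools(tools))
```

Because MCP schemas are already JSON Schema, the conversion is only a re-wrapping step and needs no field mapping.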


.env

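Based on the client code, `.env` presumably holds the settings used to create `self.client` and `self.model`, such as an API key, a base URL and a model name. That is an assumption, and the variable names below are hypothetical. Projects usually load this file with python-dotenv's `load_dotenv()`. The stdlib-only sketch below swaps in a minimal `KEY=VALUE` parser to show what such a loader does.

```python
import os


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, ignoring blank lines and # comments.

    Minimal stand-in for python-dotenv: no multi-line values, no variable
    expansion, no inline comments.
    """
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key = key.strip()
        if key.startswith("export "):
            key = key[len("export "):].strip()
        value = value.strip()
        # Strip one pair of matching surrounding quotes
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        values[key] = value
    return values


def load_env(text: str, override: bool = False) -> None:
    """Copy parsed values into os.environ; existing variables win unless override=True."""
    for key, value in parse_env(text).items():
        if override or key not in os.environ:
            os.environ[key] = value


if __name__ == "__main__":
    sample = 'OPENAI_API_KEY="your-api-key"\nBASE_URL=https://example.com/v1\n# comment\nMODEL=some-model\n'
    print(parse_env(sample))
```

The `override=False` default matches python-dotenv's behaviour: real environment variables take precedence over the file.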

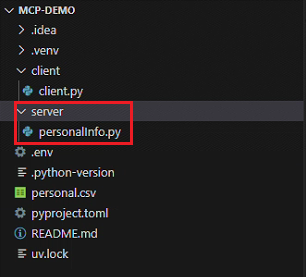

Debugging

Startup command

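The client receives the server script path as `sys.argv[1]` and passes it to `connect_to_server`. This client appears to be based on the official MCP Python quickstart client. In that quickstart, `connect_to_server` picks the interpreter from the file extension. Below is a sketch of that decision, assuming the same convention applies here. `server_command` is a hypothetical helper, and it uses `sys.executable` instead of a bare `"python"` so that the client's own environment is reused.

```python
import sys


def server_command(server_script_path: str) -> tuple[str, list[str]]:
    """Return (command, args) for launching an MCP server script over stdio.

    Follows the quickstart's extension check: .py runs with Python, .js with Node.
    """
    if server_script_path.endswith(".py"):
        # Reuse the interpreter running the client (e.g. the uv-managed venv)
        return sys.executable, [server_script_path]
    if server_script_path.endswith(".js"):
        return "node", [server_script_path]
    raise ValueError("Server script must be a .py or .js file")


if __name__ == "__main__":
    print(server_command("personalInfo.py"))
```

In the SDK, such a pair would then be passed to `StdioServerParameters(command=..., args=...)` before opening the stdio connection.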

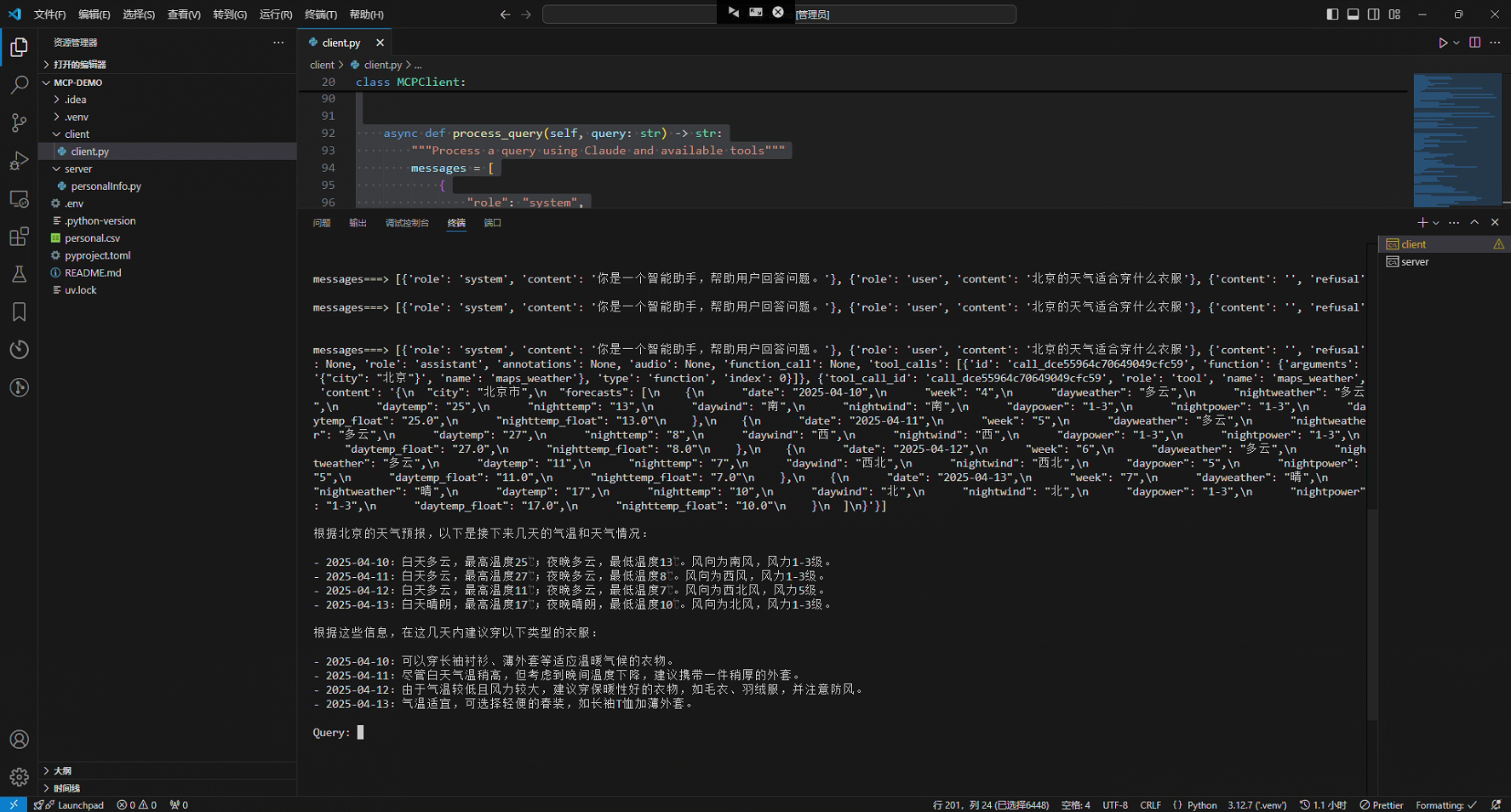

(Figure 4)

Calling the Amap (高德) MCP Server

client.py

```python
import asyncio
import json
import sys


class MCPClient:
    # __init__ (which sets self.session, self.client, self.model and
    # self.exit_stack) and connect_to_server() are not shown here.

    async def process_query(self, query: str) -> str:
        """Process a query using the LLM and the tools exposed by the MCP server."""
        messages = [
            {"role": "system", "content": "You are an intelligent assistant that helps users answer questions."},
            {"role": "user", "content": query},
        ]

        # List the tools the MCP server offers
        response = await self.session.list_tools()
        print("Available tools:", response)

        # Convert MCP tool definitions into the OpenAI function-calling format
        available_tools = [
            {
                "type": "function",
                "function": {
                    "name": tool.name,
                    "description": tool.description,
                    "parameters": tool.inputSchema,  # must be a valid JSON Schema
                },
            }
            for tool in response.tools
        ]

        response = self.client.chat.completions.create(
            model=self.model,
            messages=messages,
            tools=available_tools,
        )

        content = response.choices[0]
        if content.finish_reason == "tool_calls":
            # Store the model's tool-call request in messages
            messages.append(content.message.model_dump())

            # Execute every requested tool; each tool_call_id needs a matching "tool" message
            for tool_call in content.message.tool_calls:
                function_name = tool_call.function.name
                function_args = json.loads(tool_call.function.arguments)
                print(f"\n\n[Calling tool {function_name} with args {function_args}]\n\n")
                result = await self.session.call_tool(function_name, function_args)
                result_content = result.content[0].text
                messages.append({
                    "tool_call_id": tool_call.id,
                    "role": "tool",
                    "name": function_name,
                    "content": result_content,
                })

            # Send the tool results back to the model to generate the final answer
            print("messages===>", messages)
            response = self.client.chat.completions.create(
                model=self.model,
                messages=messages,
                tools=available_tools,
            )
            return response.choices[0].message.content.strip()

        return content.message.content.strip()

    async def chat_loop(self):
        """Run an interactive chat loop."""
        print("\nMCP Client Started!")
        print("Type your queries or 'quit' to exit.")
        while True:
            try:
                query = input("\nQuery: ").strip()
                if query.lower() == "quit":
                    break
                response = await self.process_query(query)
                print("\n" + response)
            except Exception as e:
                print(f"\nError: {e}")

    async def cleanup(self):
        """Clean up resources."""
        await self.exit_stack.aclose()


async def main():
    if len(sys.argv) < 2:
        print("Usage: python client.py <path_to_server_script>")
        sys.exit(1)

    client = MCPClient()
    try:
        await client.connect_to_server(sys.argv[1])
        await client.chat_loop()
    finally:
        await client.cleanup()


if __name__ == "__main__":
    asyncio.run(main())
```
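With a server that exposes many tools, such as the Amap one, the model is more likely to request several tools in a single turn. The OpenAI Chat Completions API requires every `tool_call_id` in an assistant message to be answered by a `role: "tool"` message before the next request; otherwise the request is rejected. That is why handling only `tool_calls[0]` can fail. The hypothetical checker below makes the rule concrete on plain message dicts.

```python
def unanswered_tool_calls(messages: list[dict]) -> list[str]:
    """Return the tool_call ids of the most recent tool-calling assistant
    message that have no matching "tool" reply yet."""
    pending: list[str] = []
    for msg in messages:
        if msg.get("role") == "assistant" and msg.get("tool_calls"):
            # A new tool-call request replaces the set of ids we are waiting for
            pending = [call["id"] for call in msg["tool_calls"]]
        elif msg.get("role") == "tool":
            tool_call_id = msg.get("tool_call_id")
            if tool_call_id in pending:
                pending.remove(tool_call_id)
    return pending


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "question"},
        {"role": "assistant", "content": None, "tool_calls": [{"id": "call_1"}, {"id": "call_2"}]},
        {"role": "tool", "tool_call_id": "call_1", "content": "result"},
    ]
    print(unanswered_tool_calls(history))
```

Running a check like this before the second `chat.completions.create` call shows exactly which tool results are still missing.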


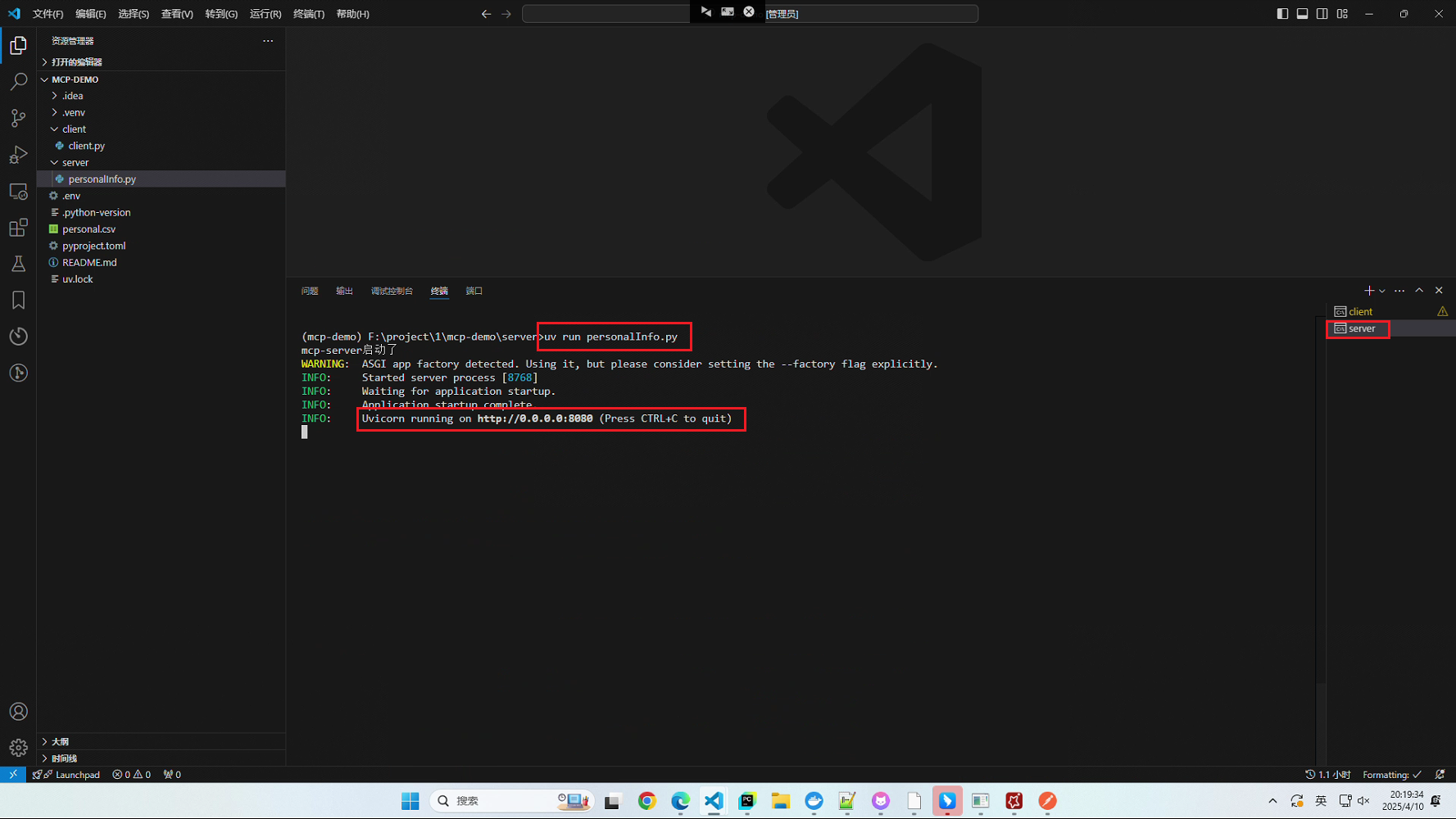

(Figure 5)

SSE Debugging

MCP Server

personalInfo.py

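```python
import csv
import os

import uvicorn

# ... (the FastMCP instance `mcp` and the tool decorator for the
# function below are defined here; not shown) ...


def get_personal_info(name: str) -> str:  # function name is a placeholder
    """
    Look up a person's information in the CSV file by name and return it as a string.
    If no record is found, return a message saying so.
    """
    # personal.csv sits in the parent directory of this script
    file_path = os.path.abspath(os.path.join(os.path.dirname(__file__), "..", "personal.csv"))
    try:
        with open(file_path, mode="r", encoding="utf-8", newline="") as file:
            reader = csv.reader(file)
            for row in reader:
                # Rejoin the fields so commas inside the info part are not lost
                line = ",".join(row).strip()
                if not line:
                    continue
                # Each record is expected to look like "name:info"
                parts = line.split(":", 1)
                if len(parts) != 2:
                    print(f"Malformed line, skipping: {line}")
                    continue
                record_name, info = parts
                if record_name.strip() == name:
                    return info.strip()
        return f"No information found for {name}."
    except FileNotFoundError:
        return "File not found; please check that the file path is correct."
    except Exception as e:
        return f"Error while reading the file: {e}"


if __name__ == "__main__":
    # Initialize and run the server over SSE
    print("mcp-server started")
    # sse_app() is a method that builds the ASGI app, so it must be called
    uvicorn.run(mcp.sse_app(), host="0.0.0.0", port=8080)
```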
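The server's lookup expects each record to have the form `name:info`, which is what `split(":", 1)` implies. The actual personal.csv content is not shown. The same matching can be tried without a file by reading from an in-memory `io.StringIO`. `find_info` and the sample rows are hypothetical.

```python
import csv
import io


def find_info(csv_text: str, name: str) -> str:
    """Mirror the server's name:info matching, reading from a string instead of a file."""
    for row in csv.reader(io.StringIO(csv_text)):
        # Rejoin fields so commas in the info part survive csv splitting
        line = ",".join(row).strip()
        if not line:
            continue
        parts = line.split(":", 1)
        if len(parts) != 2:
            continue  # malformed line without a ":" separator
        record_name, info = parts
        if record_name.strip() == name:
            return info.strip()
    return f"No information found for {name}."


if __name__ == "__main__":
    sample = "Alice:engineer, 30\nBob:designer\n"
    print(find_info(sample, "Alice"))
```

Once the server itself is running, FastMCP's SSE app serves the event stream at `/sse` by default. Assuming default settings, a client would therefore connect to `http://localhost:8080/sse`.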


(Figure 6)

MCP Client

client.py

```python
import asyncio
import json
import sys


class MCPClient:
    # __init__ (which sets self.session, self.client, self.model and
    # self.exit_stack) and connect_to_server() are not shown here.

    async def process_query(self, query: str) -> str:
        """Process a query using the LLM and the tools exposed by the MCP server."""
        messages = [
            {"role": "system", "content": "You are an intelligent assistant that helps users answer questions."},
            {"role": "user", "content": query},
        ]

        # List the tools the MCP server offers
        response = await self.session.list_tools()
        print("Available tools:", response)

        # Convert MCP tool definitions into the OpenAI function-calling format
        available_tools = [
            {
                "type": "function",
                "function": {
                    "name": tool.name,
                    "description": tool.description,
                    "parameters": tool.inputSchema,  # must be a valid JSON Schema
                },
            }
            for tool in response.tools
        ]

        response = self.client.chat.completions.create(
            model=self.model,
            messages=messages,
            tools=available_tools,
        )

        content = response.choices[0]
        if content.finish_reason == "tool_calls":
            # Store the model's tool-call request in messages
            messages.append(content.message.model_dump())

            # Execute every requested tool; each tool_call_id needs a matching "tool" message
            for tool_call in content.message.tool_calls:
                function_name = tool_call.function.name
                function_args = json.loads(tool_call.function.arguments)
                print(f"\n\n[Calling tool {function_name} with args {function_args}]\n\n")
                result = await self.session.call_tool(function_name, function_args)
                result_content = result.content[0].text
                messages.append({
                    "tool_call_id": tool_call.id,
                    "role": "tool",
                    "name": function_name,
                    "content": result_content,
                })

            # Send the tool results back to the model to generate the final answer
            # (alternatively, return the raw tool output directly:)
            # return result_content
            print("messages===>", messages)
            response = self.client.chat.completions.create(
                model=self.model,
                messages=messages,
                tools=available_tools,
            )
            return response.choices[0].message.content.strip()

        return content.message.content.strip()

    async def chat_loop(self):
        """Run an interactive chat loop."""
        print("\nMCP Client Started!")
        print("Type your queries or 'quit' to exit.")
        while True:
            try:
                query = input("\nQuery: ").strip()
                if query.lower() == "quit":
                    break
                response = await self.process_query(query)
                print("\n" + response)
            except Exception as e:
                print(f"\nError: {e}")

    async def cleanup(self):
        """Clean up resources."""
        await self.exit_stack.aclose()


async def main():
    if len(sys.argv) < 2:
        print("Usage: python client.py <path_to_server_script>")
        sys.exit(1)

    client = MCPClient()
    try:
        await client.connect_to_server(sys.argv[1])
        await client.chat_loop()
    finally:
        await client.cleanup()


if __name__ == "__main__":
    asyncio.run(main())
```
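With the SSE transport, the server pushes messages as Server-Sent Events: text frames made of `event:` and `data:` lines, with a blank line between events. In MCP's SSE transport, the first event is an `endpoint` event that tells the client where to POST its requests. The MCP SDK parses all of this for you. The sketch below only illustrates the wire format with a minimal parser that ignores `id:`/`retry:` fields. `parse_sse` is a hypothetical helper, and the sample frames are illustrative, not captured from this server.

```python
def parse_sse(stream: str) -> list[tuple[str, str]]:
    """Split an SSE text stream into (event, data) pairs.

    Events end at a blank line, multiple data: lines are joined with "\n",
    lines starting with ":" are comments, and the event type defaults to "message".
    """
    events: list[tuple[str, str]] = []
    event_type, data_lines = "message", []
    for line in stream.splitlines() + [""]:  # trailing "" flushes the last event
        if line == "":
            if data_lines:
                events.append((event_type, "\n".join(data_lines)))
            event_type, data_lines = "message", []
        elif line.startswith(":"):
            continue  # comment / keep-alive line
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]  # a single leading space is not part of the value
            if field == "event":
                event_type = value
            elif field == "data":
                data_lines.append(value)
    return events


if __name__ == "__main__":
    sample = 'event: endpoint\ndata: /messages/?session_id=abc\n\nevent: message\ndata: {"jsonrpc": "2.0"}\n\n'
    print(parse_sse(sample))
```

Seeing the raw frames this way helps when debugging SSE with tools like curl or the browser's network panel.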


Startup command


Removing the Required Server File Path Argument

client.py

```python
import asyncio
import json


class MCPClient:
    # __init__ (which sets self.session, self.client, self.model and
    # self.exit_stack) and connect_to_server() (which here takes no
    # server path) are not shown here.

    async def process_query(self, query: str) -> str:
        """Process a query using the LLM and the tools exposed by the MCP server."""
        messages = [
            {"role": "system", "content": "You are an intelligent assistant that helps users answer questions."},
            {"role": "user", "content": query},
        ]

        # List the tools the MCP server offers
        response = await self.session.list_tools()
        print("Available tools:", response)

        # Convert MCP tool definitions into the OpenAI function-calling format
        available_tools = [
            {
                "type": "function",
                "function": {
                    "name": tool.name,
                    "description": tool.description,
                    "parameters": tool.inputSchema,  # must be a valid JSON Schema
                },
            }
            for tool in response.tools
        ]

        response = self.client.chat.completions.create(
            model=self.model,
            messages=messages,
            tools=available_tools,
        )

        content = response.choices[0]
        if content.finish_reason == "tool_calls":
            # Store the model's tool-call request in messages
            messages.append(content.message.model_dump())

            # Execute every requested tool; each tool_call_id needs a matching "tool" message
            for tool_call in content.message.tool_calls:
                function_name = tool_call.function.name
                function_args = json.loads(tool_call.function.arguments)
                print(f"\n\n[Calling tool {function_name} with args {function_args}]\n\n")
                result = await self.session.call_tool(function_name, function_args)
                result_content = result.content[0].text
                messages.append({
                    "tool_call_id": tool_call.id,
                    "role": "tool",
                    "name": function_name,
                    "content": result_content,
                })

            # Send the tool results back to the model to generate the final answer
            print("messages===>", messages)
            response = self.client.chat.completions.create(
                model=self.model,
                messages=messages,
                tools=available_tools,
            )
            return response.choices[0].message.content.strip()

        return content.message.content.strip()

    async def chat_loop(self):
        """Run an interactive chat loop."""
        print("\nMCP Client Started!")
        print("Type your queries or 'quit' to exit.")
        while True:
            try:
                query = input("\nQuery: ").strip()
                if query.lower() == "quit":
                    break
                response = await self.process_query(query)
                print("\n" + response)
            except Exception as e:
                print(f"\nError: {e}")

    async def cleanup(self):
        """Clean up resources."""
        await self.exit_stack.aclose()


async def main():
    # The server path argument is no longer required:
    # if len(sys.argv) < 2:
    #     print("Usage: python client.py <path_to_server_script>")
    #     sys.exit(1)

    client = MCPClient()
    try:
        # await client.connect_to_server(sys.argv[1])
        await client.connect_to_server()
        await client.chat_loop()
    finally:
        await client.cleanup()


if __name__ == "__main__":
    asyncio.run(main())
```
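With the argument check commented out, `connect_to_server()` is called without a path, so the connection target has to come from inside the client. An alternative is to keep the command-line argument but make it optional. In the sketch below, the default URL assumes the SSE server from this article on port 8080 with FastMCP's default `/sse` path. `resolve_server` and `DEFAULT_SERVER` are hypothetical names.

```python
import sys

# Hypothetical default: the article's SSE server on port 8080,
# assuming FastMCP's default SSE endpoint path "/sse"
DEFAULT_SERVER = "http://localhost:8080/sse"


def resolve_server(argv: list[str], default: str = DEFAULT_SERVER) -> str:
    """Use the first command-line argument if given, otherwise fall back to the default."""
    return argv[1] if len(argv) > 1 else default


if __name__ == "__main__":
    print("Connecting to:", resolve_server(sys.argv))
```

This keeps `python client.py` working with no arguments while still allowing a different server to be passed explicitly.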


Startup command


Session Overwriting with Multiple MCP Server Configurations

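Scenario: several MCP servers are configured, so the client connects to each one and collects the combined tool list. It then sends the question and the tool list to the model, and the model returns the tool to call. Tool calls go through the Session, however, and each new connection overwrote the previous Session. As a result, the call fails with a "tool not found" error.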
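One way to avoid this is to keep every session instead of a single `self.session`, and to record which session each tool came from, so that each call is routed to the server that owns the tool. Below is a minimal sketch under that assumption. `FakeSession` is a hypothetical stand-in with async `list_tools()`/`call_tool()` methods, loosely shaped like the `ClientSession` methods the client uses; to stay self-contained, its `list_tools` returns plain names rather than the SDK's result object. `MultiServerRouter` and the tool names are hypothetical as well.

```python
import asyncio


class FakeSession:
    """Hypothetical stand-in for an MCP ClientSession connected to one server."""

    def __init__(self, server: str, tools: list[str]):
        self.server = server
        self.tools = tools

    async def list_tools(self) -> list[str]:
        return self.tools

    async def call_tool(self, name: str, args: dict) -> str:
        return f"{self.server}.{name}({args})"


class MultiServerRouter:
    """Keeps one session per server plus a tool-name -> session map."""

    def __init__(self):
        self.sessions: list[FakeSession] = []
        self.tool_to_session: dict[str, FakeSession] = {}

    async def add_session(self, session: FakeSession) -> None:
        # Append instead of overwriting a single session attribute
        self.sessions.append(session)
        for tool_name in await session.list_tools():
            if tool_name in self.tool_to_session:
                raise ValueError(f"Duplicate tool name across servers: {tool_name}")
            self.tool_to_session[tool_name] = session

    async def call_tool(self, name: str, args: dict) -> str:
        session = self.tool_to_session.get(name)
        if session is None:
            raise KeyError(f"Unknown tool: {name}")
        return await session.call_tool(name, args)


async def demo() -> list[str]:
    router = MultiServerRouter()
    await router.add_session(FakeSession("personal", ["get_personal_info"]))
    await router.add_session(FakeSession("amap", ["get_weather"]))
    # Both tools stay reachable even though two sessions were created
    return [
        await router.call_tool("get_personal_info", {"name": "Alice"}),
        await router.call_tool("get_weather", {"city": "Beijing"}),
    ]


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In the real client, each `ClientSession` would still be entered into the shared `self.exit_stack`, so that all connections stay open until `cleanup()`. If two servers expose tools with the same name, one option is to prefix each tool name with its server name.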
